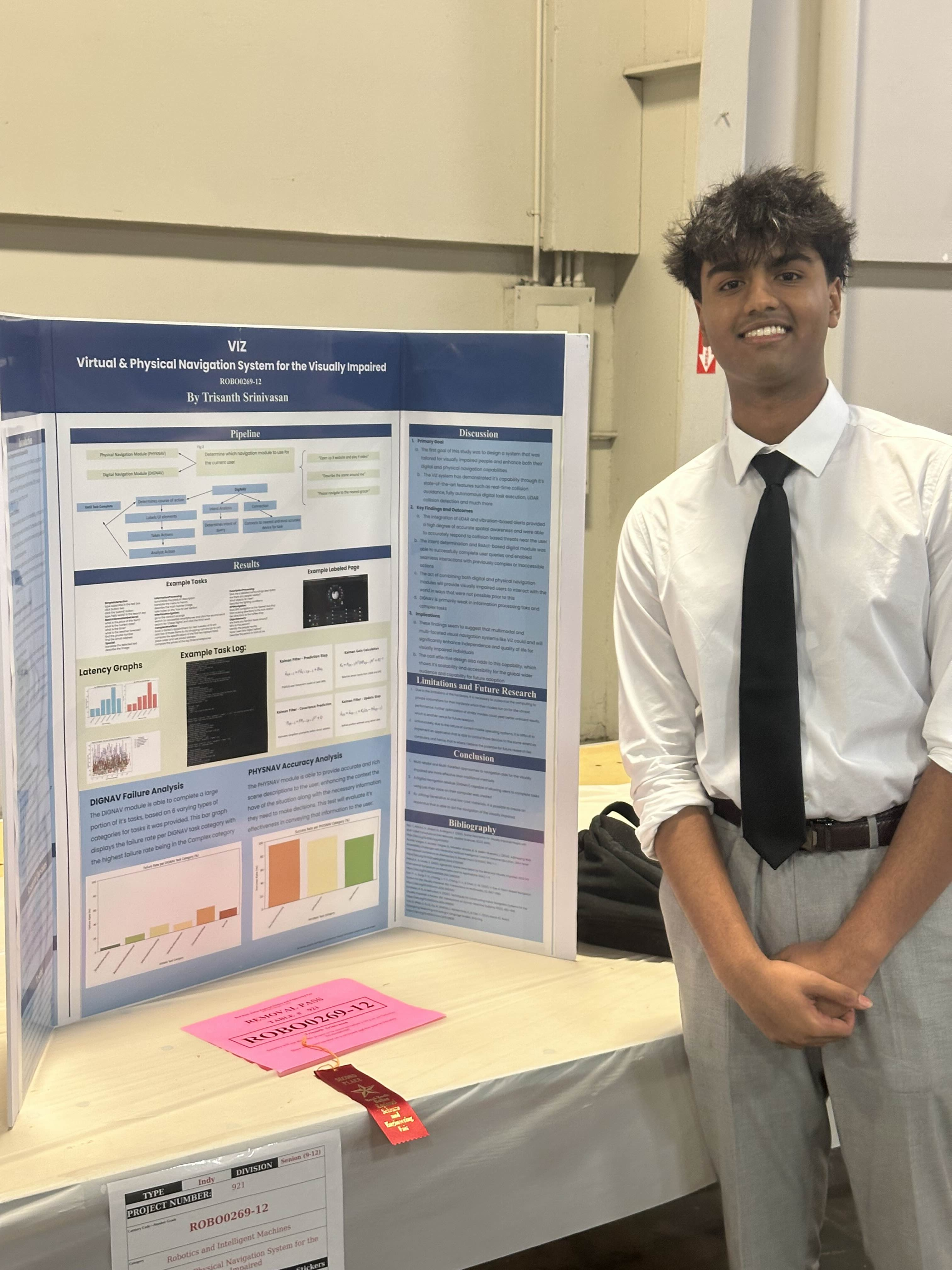

Email: trisanth[at]cyrionlabs[dot]org

I am currently a research intern at NYU mLab and a Machine Learning Researcher and Co-Founder at Cyrion Labs. My work focuses on human-computer interaction, visual language models, bias, and applied AI. Previously, I contributed to scalable systems and AI-driven platforms in roles ranging from Full-Stack Developer to CTO at startups and established companies. In my free time, I volunteer as a computer refurbishing technician, making computers more accessible to the visually impaired.

April 2025: One paper (PhysNav-DG: A Novel Adaptive Framework for Robust VLM-Sensor Fusion in Navigation Applications) accepted to DG-EBF @ IEEE CVPR 2025.

April 2025: One paper (Towards Leveraging Semantic Web Technologies for Automated UI Element Annotation) accepted to IEEE ICICT 2025.

March 2025: Second demo (VIZ: Virtual & Physical Navigation System for the Visually Impaired) accepted to IEEE CVPR 2025.

March 2025: One demo (GenECA: A Generalizable Framework for Real-Time Multimodal Embodied Conversational Agents) accepted to IEEE CVPR 2025.

March 2025: Joined NYU mLab as a research intern. I will be working on network filtering technology in K-12 environments.

March 2025: One preprint published on arXiv.

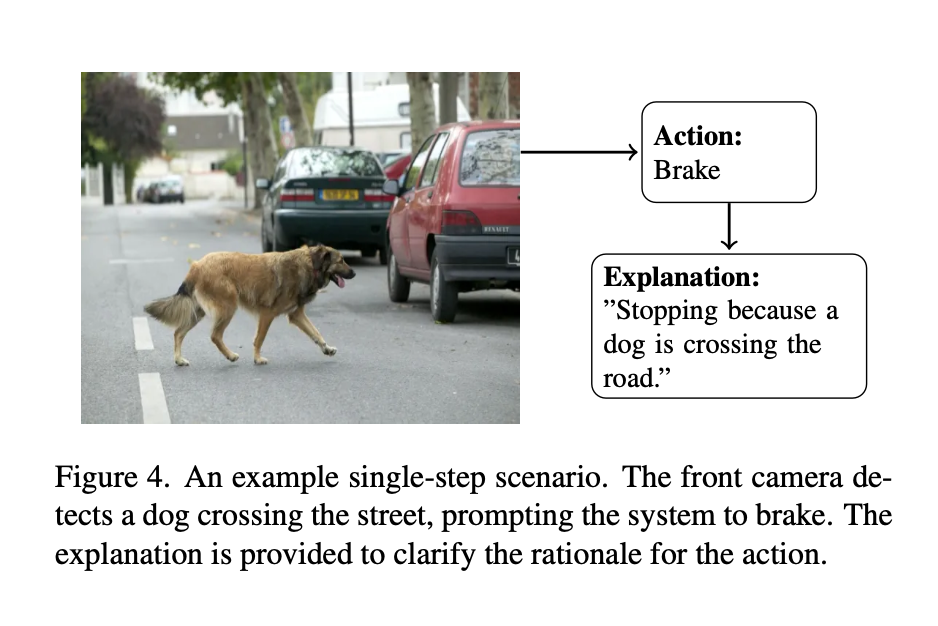

Trisanth Srinivasan, Santosh Patapati. IEEE Conference on Computer Vision and Pattern Recognition Workshops (DG-EBF), 2025. [PDF] Introduces an adaptive framework that fuses visual language models with sensor data, enhancing navigation in complex, dynamic environments.

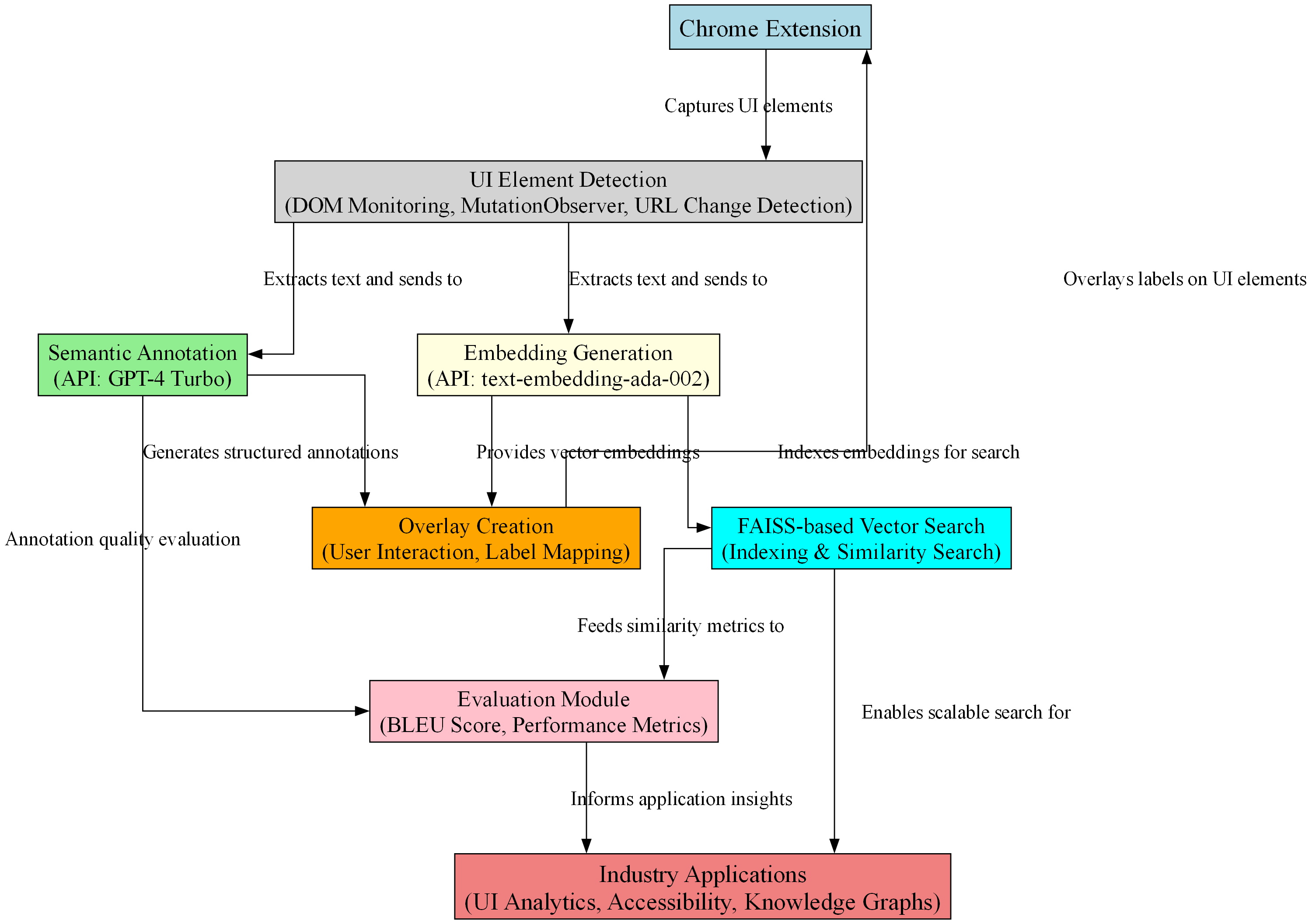

Trisanth Srinivasan. IEEE International Conference on Inventive Computation Technologies, 2025. [PDF] Proposes a novel approach to automated UI element annotation using semantic web technologies, enhancing the accessibility and usability of web interfaces.

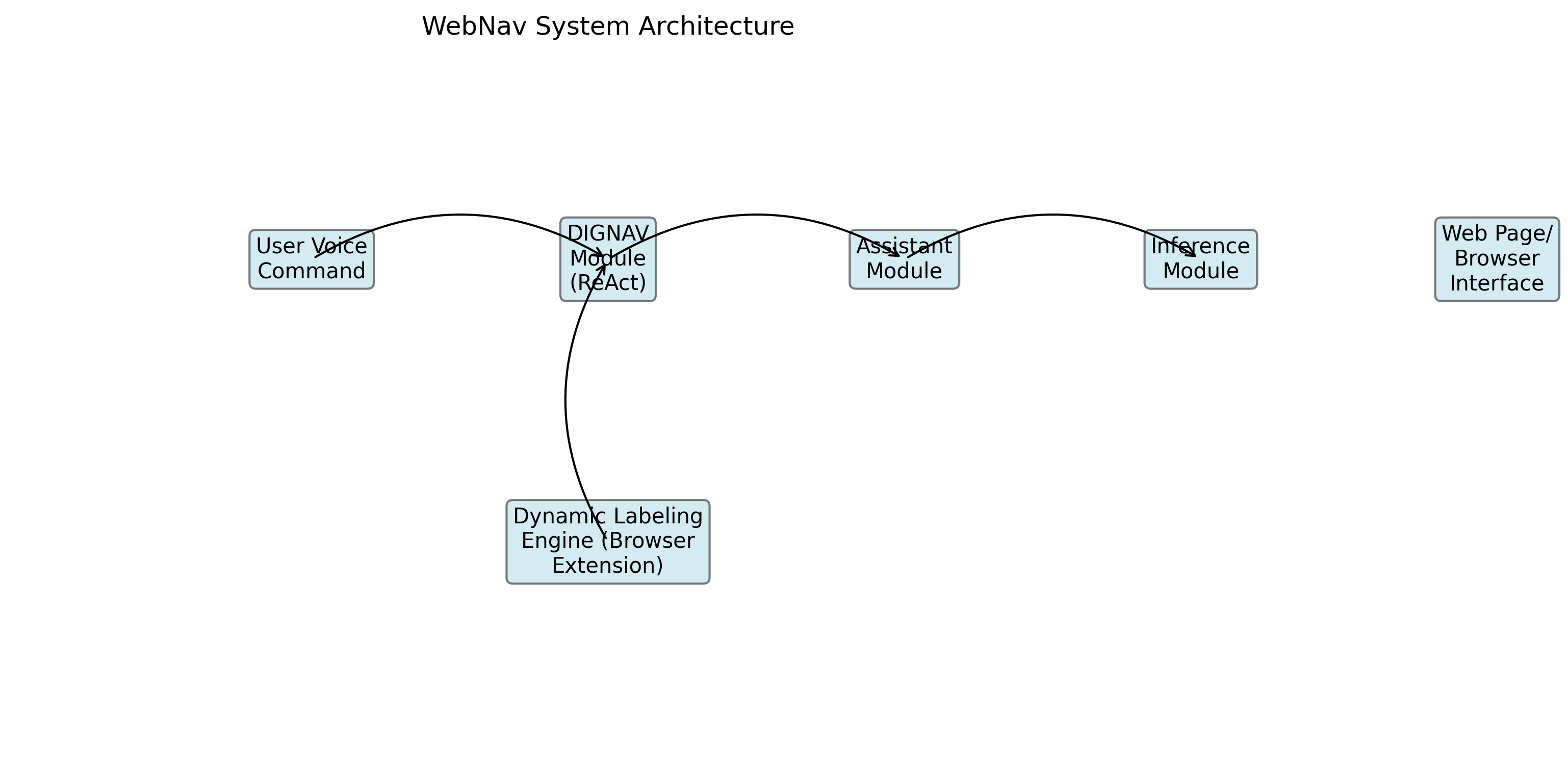

Trisanth Srinivasan, Santosh Patapati. Preprint, arXiv:2503.13843. [PDF] Presents a novel voice-controlled navigation agent using a ReAct-inspired architecture, offering improved accessibility for the visually impaired.

Santosh Patapati, Trisanth Srinivasan. IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025 (Demo). [Video] Introduces a robust framework for multimodal interactions with embodied conversational agents, emphasizing emotion-sensitive interaction.

Trisanth Srinivasan, Santosh Patapati. IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2025 (Demo). [Poster] Addresses challenges faced by the visually impaired by using generative AI to mimic human behavior in complex digital tasks and physical navigation.

The website template was adapted from Yu Deng.